Statistics

Statistical measurements within VisionPro Deep Learning are used to evaluate the performance of the trained neural network. In deep learning, evaluation means measuring a trained neural network model against test data (data labeled by the user but not used in training). To judge the usability and performance of a neural network model through statistical metrics, calculate those metrics only on the test data.

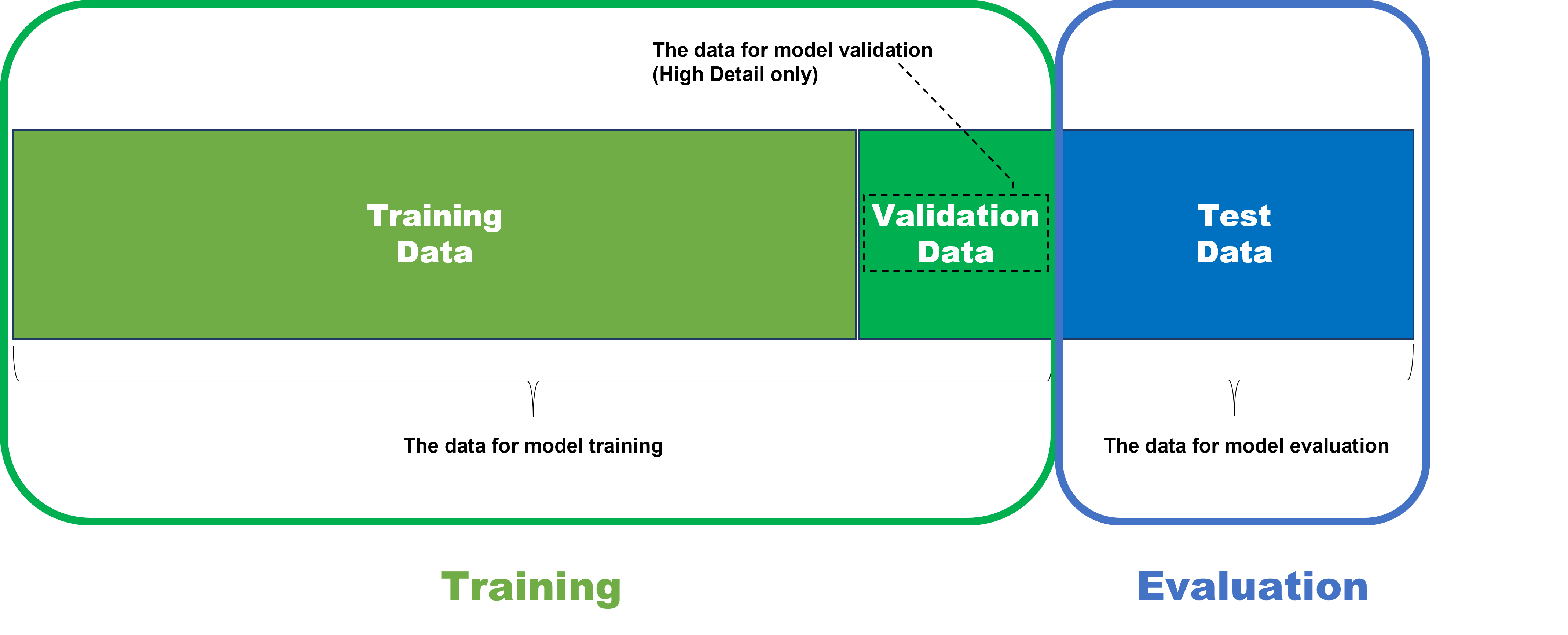

It is important to understand that once your neural network model, that is, your tool (Blue Locate/Read, Green Classify, or Red Analyze), has been trained, you must not judge how well it was trained by testing it against the data used to train it. Training data cannot be used to evaluate a trained model because the model was already fitted to that data during training in order to perform as well as possible on it. As a result, this data cannot show how well the model generalizes or how it performs on fresh, unseen data.

Therefore, to test the model's usability and performance fairly and correctly, apply the model to data that it has never seen, including during its training phase. The data set aside for this evaluation is called the test data set.
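The idea of holding out unseen data can be illustrated with a minimal Python sketch. It is not part of VisionPro Deep Learning; the function name `split_train_test`, the file names, and the 20% test fraction are assumptions chosen only for illustration.

```python
import random


def split_train_test(items, test_fraction=0.2, seed=0):
    """Shuffle the items and hold out a fraction of them as test data.

    Hypothetical helper for illustration; not a VisionPro Deep Learning API.
    """
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(items)
    # A fixed seed keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    # The first n_test items become the test set, the rest the training set.
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical labeled images.
image_names = [f"image_{i:03d}.png" for i in range(10)]
train_set, test_set = split_train_test(image_names, test_fraction=0.2)

# No image may appear in both sets; otherwise the test is not "unseen".
overlap = set(train_set) & set(test_set)
print(len(train_set), len(test_set), len(overlap))
```

The key property is that the two sets are disjoint: any image that leaks from the training set into the test set makes the resulting metrics look better than they would on truly new data.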

Note that the test data set lies outside the scope of training, whereas the validation data set is used during the training phase. The validation data is part of the training set, and its purpose is to choose the best of the many models generated from the training data as the final output of training. For High Detail modes, the validation loss (the loss calculated from the validation data) is calculated for each model during the training phase, and the model with the best validation loss is selected as the result of training. Validation data is used only in the Green Classify and Red Analyze High Detail modes. Tools in the other modes of operation do not use validation data, so they choose the best model using the loss calculated from the training data.
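As a rough illustration of selecting a model by validation loss, the sketch below picks the candidate with the lowest loss from a list. The function name `select_best_model` and the loss values are hypothetical; the selection logic inside VisionPro Deep Learning is not documented here and may differ.

```python
def select_best_model(validation_losses):
    """Return the index of the candidate model with the lowest validation loss.

    Hypothetical helper for illustration; lower loss is assumed to be better.
    """
    if not validation_losses:
        raise ValueError("at least one candidate model is required")
    # On ties, min() returns the earliest candidate.
    return min(range(len(validation_losses)), key=validation_losses.__getitem__)


# Made-up validation losses for five candidate models produced during training.
losses = [0.92, 0.61, 0.48, 0.53, 0.57]
best = select_best_model(losses)
print(f"selected candidate {best} with validation loss {losses[best]}")
```

Because the validation data influences which model is kept, it cannot double as test data: the selected model has already been chosen to look good on it.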

The statistical metrics for each tool in VisionPro Deep Learning help you do the following:

- Estimate future performance, for example, estimate rates of false positives.

- Optimize tool parameters by finding good parameter combinations or setting various thresholds.

- Test the reproducibility of model results.
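To make the first two uses concrete, here is a minimal Python sketch that estimates false positive and false negative rates on test data at several thresholds. The scores, labels, and function names are invented for illustration, and the convention that a score at or above the threshold counts as a positive (for example, defective) result is an assumption.

```python
def false_positive_rate(scores, labels, threshold):
    """Fraction of negative samples (label 0) scored at or above the threshold."""
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not negatives:
        raise ValueError("need at least one negative sample")
    return sum(s >= threshold for s in negatives) / len(negatives)


def false_negative_rate(scores, labels, threshold):
    """Fraction of positive samples (label 1) scored below the threshold."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    if not positives:
        raise ValueError("need at least one positive sample")
    return sum(s < threshold for s in positives) / len(positives)


# Made-up test-set scores with their ground-truth labels (1 = positive).
scores = [0.05, 0.20, 0.35, 0.40, 0.60, 0.75, 0.80, 0.95]
labels = [0, 0, 0, 1, 0, 1, 1, 1]

# Sweep a few candidate thresholds and report both error rates.
for t in (0.3, 0.5, 0.7):
    fpr = false_positive_rate(scores, labels, t)
    fnr = false_negative_rate(scores, labels, t)
    print(f"threshold={t:.1f}  FPR={fpr:.2f}  FNR={fnr:.2f}")
```

Raising the threshold lowers the false positive rate at the cost of more false negatives, which is the trade-off a threshold setting has to balance.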

The topics in this section will help you to understand the metrics output by the Cognex VisionPro Deep Learning Tools:

- Score Histogram

- Receiver Operating Characteristic (ROC) Curve and Area Under the Curve (AUC)

- Confusion Matrix

- Accuracy, Precision, Recall and F-Score
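As a quick orientation before those topics, the following Python sketch shows the standard textbook definitions of a binary confusion matrix and of accuracy, precision, recall, and F1 (the F-score with equal weight on precision and recall). The labels and function names are made up for illustration, and the exact way VisionPro Deep Learning computes and reports these values may differ.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def summary_metrics(tp, fp, fn, tn):
    """Return accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return accuracy, precision, recall, f1


# Made-up ground truth and predictions for ten test images.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
accuracy, precision, recall, f1 = summary_metrics(tp, fp, fn, tn)
print(f"TP={tp} FP={fp} FN={fn} TN={tn}")
print(f"accuracy={accuracy:.2f} precision={precision:.2f} "
      f"recall={recall:.2f} F1={f1:.2f}")
```

Each metric answers a different question about the same four counts, which is why they are read together instead of being collapsed into one number.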

The performance of a deep learning-based neural network model cannot be evaluated based on either of the following:

- A "grade" of the quality of the neural network model

  - A neural network has no grade in terms of its quality.

- A "score" that is an output of the neural network model

  - A neural network has several metrics that present its performance from a few different angles, but no single value fully represents a neural network's fitness and performance.