Configure Multiple GPUs

Except under very narrow circumstances, using multiple GPUs in a single system will not reduce the time it takes to train or process a Deep Learning tool. Instead, multiple GPUs can:

- Increase system throughput when your application uses multiple threads to process images concurrently.

- Increase training productivity by allowing you to train multiple tools at the same time.

If you set up multiple GPUs, all of them should be the same model with the same specification. For example, if you install 3 GPUs and one of them is an RTX 3080 10GB, all three should be RTX 3080 10GB cards. This applies to all NVIDIA GeForce® series cards.
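One way to confirm that the installed cards match is to compare the model name and memory size that `nvidia-smi` reports for each GPU. The Python sketch below assumes the NVIDIA driver's `nvidia-smi` utility is on the `PATH`; the helper names (`parse_gpu_inventory`, `gpus_are_identical`, `query_installed_gpus`) are hypothetical and not part of VisionPro Deep Learning. Name and memory size are only a proxy for "same type and same specification", so treat a match as a necessary check rather than a guarantee.

```python
import subprocess


def parse_gpu_inventory(csv_text: str) -> list[tuple[str, str]]:
    """Parse `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader`
    output into (model name, memory size) pairs, one per installed GPU."""
    gpus = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue  # ignore blank lines
        # Split on the last comma so a comma inside a model name cannot break parsing.
        name, memory = line.rsplit(",", 1)
        gpus.append((name.strip(), memory.strip()))
    return gpus


def gpus_are_identical(gpus: list[tuple[str, str]]) -> bool:
    """True when every GPU reports the same model name and memory size."""
    return len(set(gpus)) <= 1


def query_installed_gpus() -> list[tuple[str, str]]:
    """Run nvidia-smi on this machine (requires the NVIDIA driver tools)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_inventory(result.stdout)
```

On a system with three matching cards, `gpus_are_identical(query_installed_gpus())` should return `True`; a difference in either model name or memory size makes it return `False`.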

When configuring a host system for multiple GPUs, keep the following in mind:

- The chassis may need to supply up to 2 kW of power.

- NVIDIA RTX / Quadro® and Tesla cards have a cooling configuration better suited to multiple-card installations.

- Make sure that the PCIe configuration provides 16 PCIe lanes for each GPU.

- Do not enable Scalable Link Interface (SLI).
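The 16-lane requirement can be checked in a similar way: `nvidia-smi` reports the currently negotiated PCIe link width of each GPU through the `pcie.link.width.current` query field. The Python sketch below flags any GPU running with fewer than 16 lanes; it assumes `nvidia-smi` is installed, and the helper names are hypothetical. Checking the negotiated width matters because, for example, a slot with a physical x16 connector may be wired for only 8 lanes.

```python
import subprocess

REQUIRED_LANES = 16  # this page asks for 16 PCIe lanes per GPU


def parse_link_widths(csv_text: str) -> dict[int, int]:
    """Map GPU index -> current PCIe link width, parsed from
    `nvidia-smi --query-gpu=index,pcie.link.width.current --format=csv,noheader,nounits`."""
    widths = {}
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue  # ignore blank lines
        index, width = (int(field) for field in line.split(","))
        widths[index] = width
    return widths


def gpus_below_x16(widths: dict[int, int]) -> list[int]:
    """Return the sorted indices of GPUs that negotiated fewer than 16 lanes."""
    return sorted(i for i, w in widths.items() if w < REQUIRED_LANES)


def query_link_widths() -> dict[int, int]:
    """Run nvidia-smi on this machine (requires the NVIDIA driver tools)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,pcie.link.width.current",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_link_widths(result.stdout)
```

An empty list from `gpus_below_x16(query_link_widths())` means every GPU reported a x16 link.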

Multiple GPU Utilization

VisionPro Deep Learning 3.2 supports the use of multiple GPUs for training and processing. This topic explains the 2 basic modes of operation and how multiple GPUs are utilized. For convenience, on this page the terms High Detail modes and Focused modes refer to the tools listed below. Grouping High Detail Quick mode with the High Detail modes applies only to this page.

High Detail Modes

- Red Analyze High Detail

- Green Classify High Detail

- Green Classify High Detail Quick

Focused Modes

- Red Analyze Focused Supervised

- Red Analyze Focused Unsupervised

- Blue Read

- Blue Locate

- Green Classify Focused
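Because this grouping is specific to this page, it can help to write it down explicitly. The Python sketch below records the family of each tool listed above and the unit of work a single GPU takes at a time during processing, as described under Multiple GPUs for Processing (an image for Focused modes, a whole tool for High Detail modes). `MODE_FAMILY` and `processing_unit` are illustrative names, not VisionPro Deep Learning API identifiers.

```python
HIGH_DETAIL = "High Detail"
FOCUSED = "Focused"

# Grouping used on this page only: High Detail Quick counts as a High Detail mode.
MODE_FAMILY = {
    "Red Analyze High Detail": HIGH_DETAIL,
    "Green Classify High Detail": HIGH_DETAIL,
    "Green Classify High Detail Quick": HIGH_DETAIL,
    "Red Analyze Focused Supervised": FOCUSED,
    "Red Analyze Focused Unsupervised": FOCUSED,
    "Blue Read": FOCUSED,
    "Blue Locate": FOCUSED,
    "Green Classify Focused": FOCUSED,
}


def processing_unit(tool_mode: str) -> str:
    """What one GPU takes at a time during processing:
    a single image for Focused modes, a whole tool for High Detail modes."""
    return "image" if MODE_FAMILY[tool_mode] == FOCUSED else "tool"
```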

Modes of Operation

When you use one or more GPUs with VisionPro Deep Learning, the possible GPU modes of operation are:

- SingleDevicePerTool (default setting) – A single GPU is used for training and processing an image.

- NoGPUSupport – Specifies that a GPU will not be used.

These GPU modes can be specified when the library is initialized, either through the C and .NET APIs or through command-line arguments passed to the VisionPro Deep Learning GUI at startup.

Multiple GPUs for Training

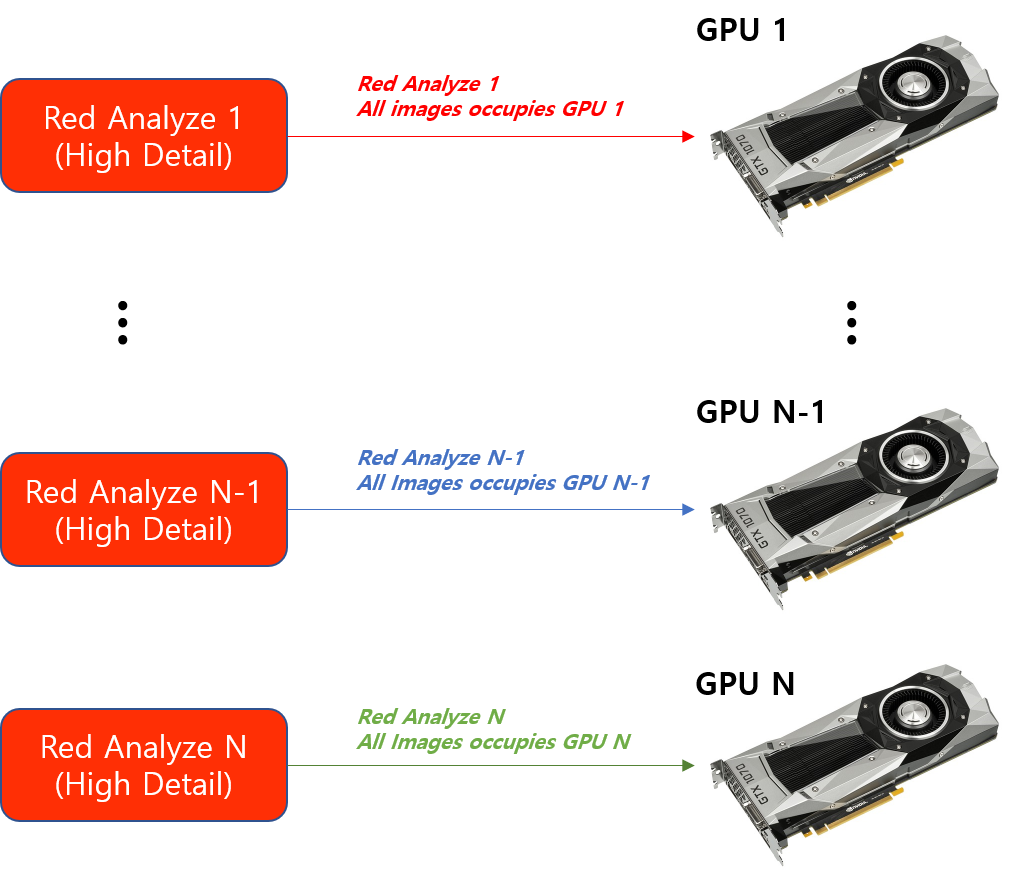

When you use multiple GPUs for training, a single tool is trained on only one GPU, whether it uses a Focused mode or a High Detail mode.

If you have N GPUs, you can:

- Run N training jobs concurrently.

- Train N tools at the same time.

This means that if you have N GPUs and N training jobs (N tools to be trained), each training job is handled by exactly one GPU. In other words, each GPU trains one tool (all of the images of that tool) at a time.

Training jobs in other Streams or Workspaces can also be picked up by the GPUs registered on your system, so they can likewise run concurrently on multiple GPUs.
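The training behavior described above amounts to a simple job queue in which the unit of work is a whole tool. The Python sketch below models it under stated assumptions: each tool has a known, hypothetical training time, jobs are taken in submission order, and a GPU picks up the next queued tool as soon as it becomes free. It is an illustrative scheduling model, not a description of how VisionPro Deep Learning is implemented internally.

```python
import heapq


def schedule_training(jobs: dict[str, float], n_gpus: int) -> dict[str, tuple[int, float]]:
    """Assign whole tools to GPUs: each GPU trains one tool at a time and takes
    the next queued tool as soon as it becomes free.

    `jobs` maps a hypothetical tool name to an estimated training time.
    Returns tool name -> (GPU index, finish time)."""
    if n_gpus < 1:
        raise ValueError("need at least one GPU")
    # Min-heap of (time the GPU becomes free, GPU index); all GPUs start idle.
    free_at = [(0.0, gpu) for gpu in range(n_gpus)]
    heapq.heapify(free_at)
    result = {}
    for tool, duration in jobs.items():  # jobs are taken in submission order
        start, gpu = heapq.heappop(free_at)  # earliest-free GPU takes the whole tool
        finish = start + duration
        result[tool] = (gpu, finish)
        heapq.heappush(free_at, (finish, gpu))
    return result
```

With three tools of 3.0 time units and three GPUs, each tool lands on its own GPU and all finish at 3.0; with two GPUs, the third tool waits and finishes at 6.0. A single tool still finishes at its own training time no matter how many GPUs are present, which is why extra GPUs raise throughput rather than shortening the training of one tool.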

Multiple GPUs for Processing

When you use multiple GPUs for processing, the situation is somewhat different:

-

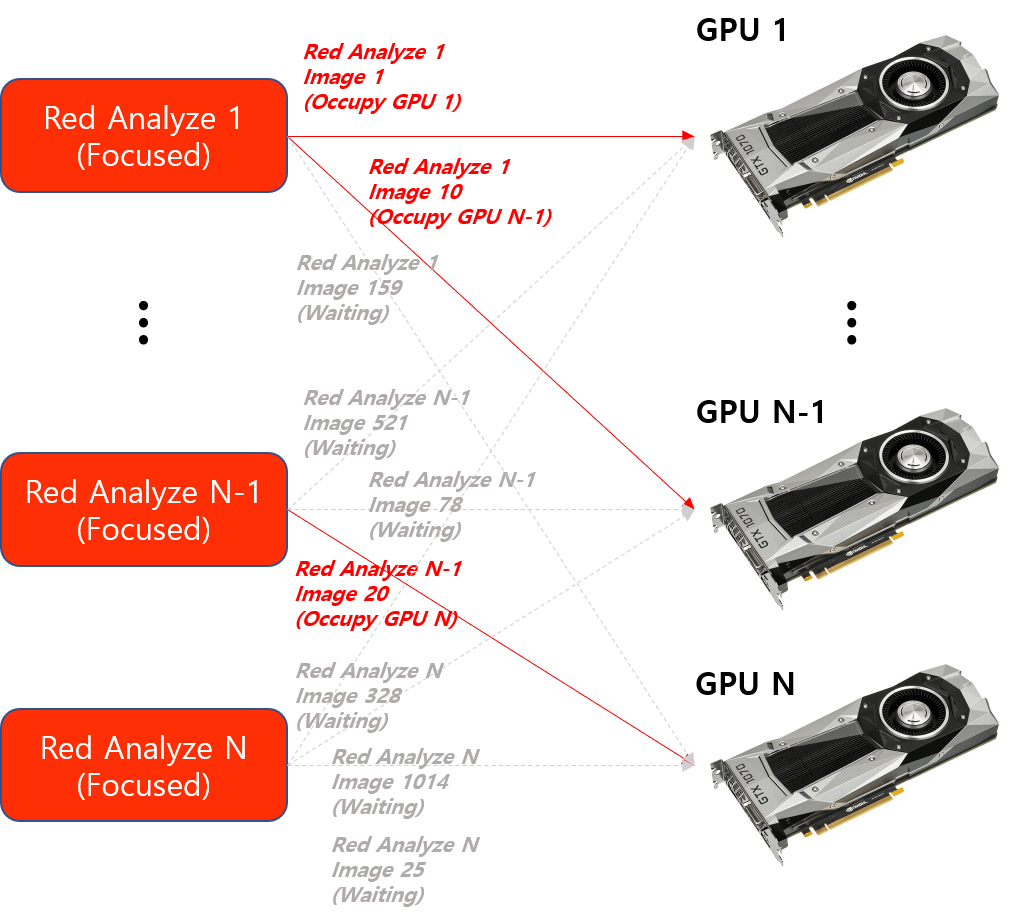

Focused modes

-

A single tool is concurrently processed on all the available GPUs registered on your system.

-

-

High Detail modes

-

A single tool is processed on only a single GPU.

-

This has several important implications.

For processing with Focused modes, if you have N GPUs and you run:

- 1 processing job (processing 1 Focused mode tool), the job is distributed across all N GPUs.

- N processing jobs (processing N Focused mode tools), the N jobs run in parallel on the N GPUs.

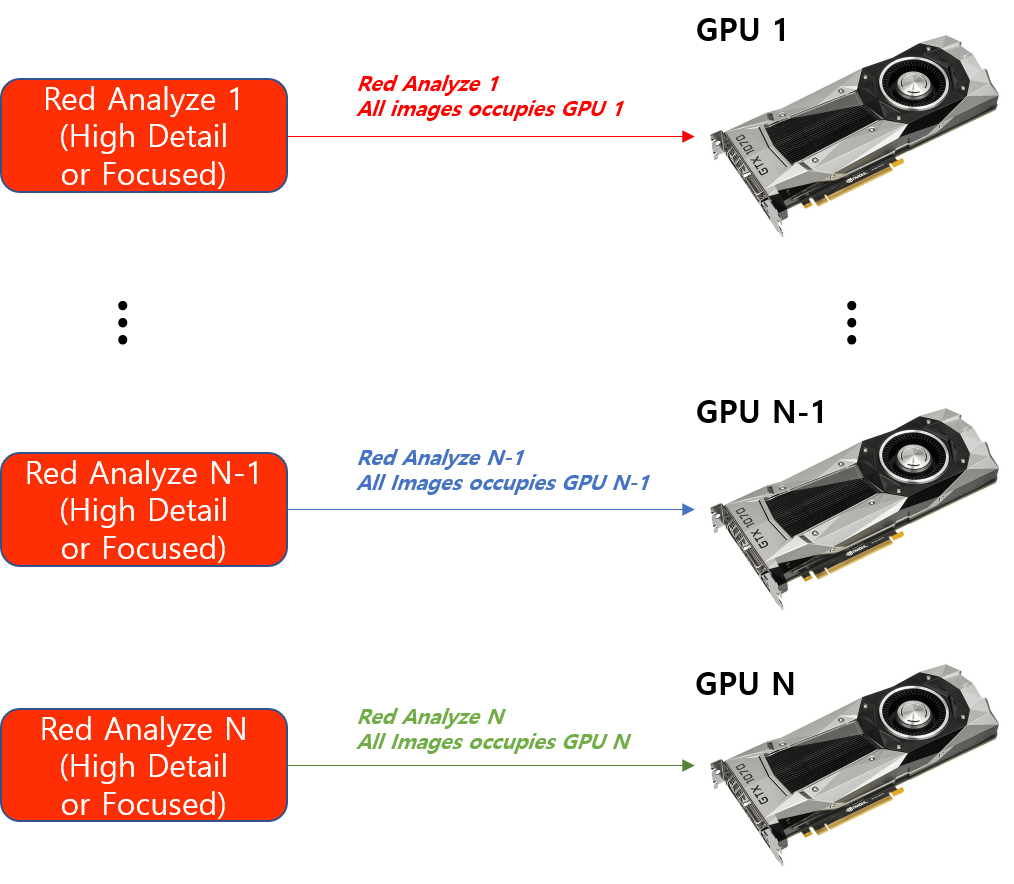

For processing with High Detail modes, the behavior is essentially the same as for training. If you have N GPUs, you can:

- Run N processing jobs concurrently.

- Process N tools at the same time.

For a Focused mode tool, this means that the images of the tool can be distributed across the N GPUs, with each GPU processing one image at a time. If you have N Focused mode tools and N GPUs, the behavior is the same: each GPU takes one image at a time, not one tool.

For a High Detail mode tool, the images cannot be distributed across the N GPUs; all of the tool's images are processed by a single GPU (one tool per GPU at a time). However, if you have N High Detail mode tools and N GPUs, all N GPUs can be used concurrently, each taking one tool (all of that tool's images) at a time, not one image.
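The difference between the two modes can be made concrete with a small simulation in which the unit of work is either an image (Focused modes) or a whole tool (High Detail modes). This Python sketch assumes each image takes a fixed, hypothetical processing time and ignores data-transfer and scheduling overhead, so it shows only the shape of the behavior, not real timings. The function name `makespan` is illustrative.

```python
import heapq


def makespan(tools: dict[str, list[float]], n_gpus: int, unit: str) -> float:
    """Simulated wall-clock time to process every image of every tool.

    unit="image": Focused-mode behavior; each GPU takes one image at a time,
                  so a single tool's images are spread over all GPUs.
    unit="tool":  High Detail-mode behavior; each GPU takes one whole tool
                  (all of its images) at a time.
    `tools` maps a hypothetical tool name to its per-image processing times."""
    if n_gpus < 1:
        raise ValueError("need at least one GPU")
    if unit == "image":
        work = [t for times in tools.values() for t in times]
    elif unit == "tool":
        work = [sum(times) for times in tools.values()]
    else:
        raise ValueError("unit must be 'image' or 'tool'")
    free_at = [0.0] * n_gpus  # min-heap of the times at which each GPU becomes idle
    for item in work:  # queue order; the earliest-free GPU takes the next item
        start = heapq.heappop(free_at)
        heapq.heappush(free_at, start + item)
    return max(free_at)
```

For one tool with 8 images of 1.0 time unit each on 4 GPUs, `makespan(..., "image")` returns 2.0 while `makespan(..., "tool")` returns 8.0, because the High Detail tool occupies a single GPU. With four such tools on 4 GPUs, both return 8.0: every GPU is busy in either case, with images in one mode and whole tools in the other.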

Again, for both Focused and High Detail modes, processing jobs in other Streams or Workspaces can also be picked up by the GPUs registered on your system, so they can likewise run concurrently on multiple GPUs.