Developers

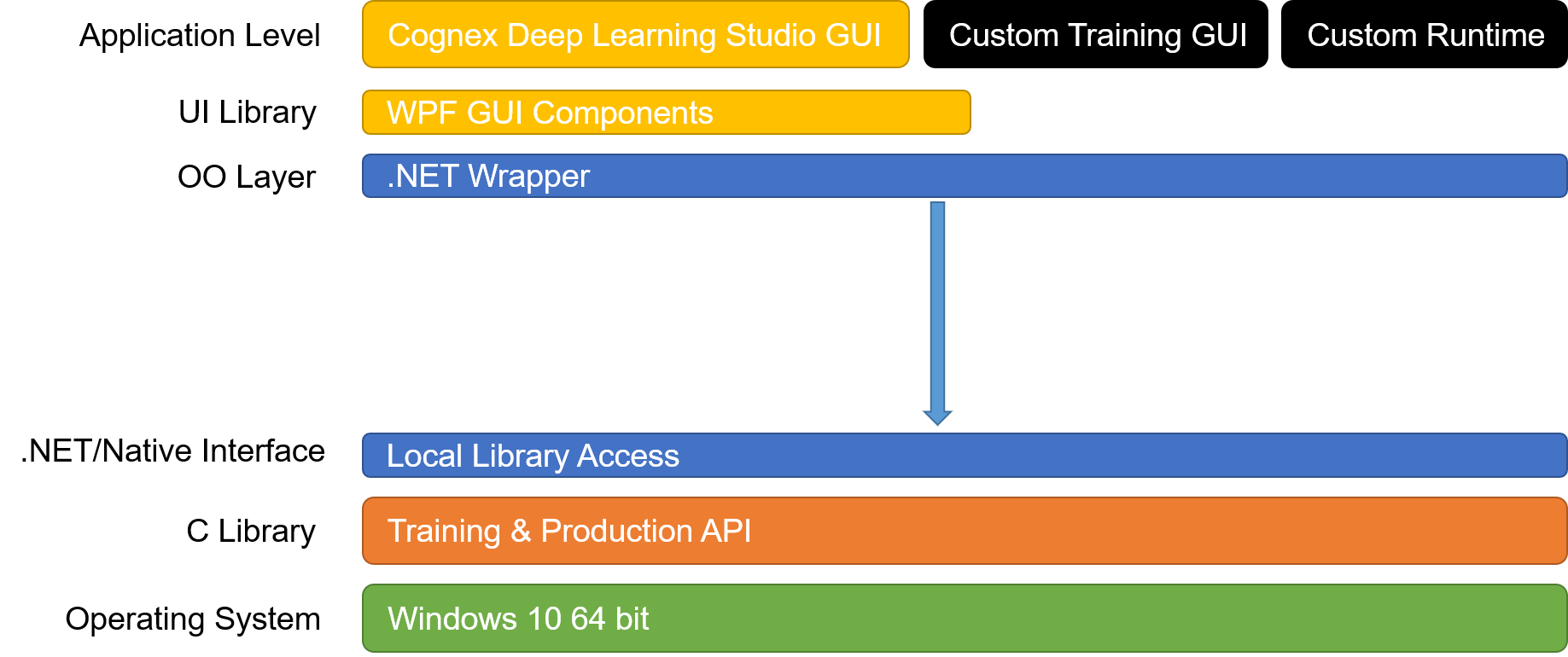

The topics in this section help developers integrate the software into their own applications. The VisionPro Deep Learning software is based on the following architecture:

C or .NET Integration

VisionPro Deep Learning provides a C library, which can be found in your installation directory (C:\Program Files\Cognex\VisionPro Deep Learning\3.2\bin), under the name vidi_51.dll. The associated header files are in the following directory:

- C:\Program Files\Cognex\VisionPro Deep Learning\3.2\Develop\include

For convenience and easier integration, VisionPro Deep Learning also distributes a .NET library that wraps all of the C library methods in an object-oriented API. The .NET library is distributed as NuGet packages, which are in the following directory:

- C:\ProgramData\Cognex\VisionPro Deep Learning\3.2\Examples\packages

The API reference guides are located in the following directory:

- C:\Program Files\Cognex\VisionPro Deep Learning\3.2\Develop\docs

In addition, there are several examples to review in the following directory:

- C:\ProgramData\Cognex\VisionPro Deep Learning\3.2\Examples

- In the above directory paths and file names, 3.2 refers to the version of the VisionPro Deep Learning software.

- Cognex highly recommends that you integrate the .NET library unless your application requires the C library.
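All of the directories listed above share two roots plus a version folder. As a minimal sketch, the following Python helper derives them for a given version. The function name `install_paths` and its dictionary keys are illustrative only, and the sketch assumes the default installation roots shown above.

```python
from pathlib import PureWindowsPath
from typing import Dict


def install_paths(version: str = "3.2") -> Dict[str, PureWindowsPath]:
    """Return the developer-related directories for one installed version.

    Assumes the default installation roots listed in this section.
    """
    program_files = PureWindowsPath(r"C:\Program Files\Cognex\VisionPro Deep Learning") / version
    program_data = PureWindowsPath(r"C:\ProgramData\Cognex\VisionPro Deep Learning") / version
    return {
        "bin": program_files / "bin",                       # C library (DLL)
        "include": program_files / "Develop" / "include",   # C header files
        "docs": program_files / "Develop" / "docs",         # API reference guides
        "examples": program_data / "Examples",              # example projects
        "nuget_packages": program_data / "Examples" / "packages",  # .NET NuGet packages
    }


if __name__ == "__main__":
    for name, path in install_paths("3.2").items():
        print(f"{name}: {path}")
```

Keeping the version as a parameter means the same lookup works if a different release is installed side by side.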

.NET Libraries

.NET libraries are also available for VisionPro Deep Learning. They provide access to the algorithms, as well as other structures that can be used throughout a project to interact with and operate on images using VisionPro Deep Learning. UI component libraries are also available for displaying and interacting with results and images.

The .NET libraries are located in:

- C:\Program Files\Cognex\VisionPro Deep Learning\3.2\Cognex Deep Learning Studio

| Library | Description |
|---|---|
| ViDi.NET.Interfaces.dll | Assembly containing the interfaces. |
| ViDi.NET.Base.dll | Assembly containing common types. |
| ViDi.NET.dll | Assembly containing an implementation of the interfaces. |
| ViDi.NET.Local.dll | Direct access to the local C library. |
| ViDi.NET.Remote.dll | Assembly containing an implementation for communication between Server/Client. |
| ViDi.NET.UI.Interfaces.dll | Interfaces of the WPF components. |
| ViDi.NET.UI.dll | WPF components. |

Command Lines

The topics in this section cover the various command line arguments that can be supplied to the VisionPro Deep Learning application when it is invoked.

The application you issue the commands to is either Cognex Deep Learning Studio.exe (the IDE application) or VisionPro Deep Learning Service.exe (if you are using the Deep Learning Client/Server functionality).

The following paths are the locations for the above applications:

- C:\Program Files\Cognex\VisionPro Deep Learning\3.2\Cognex Deep Learning Studio\Cognex Deep Learning Studio.exe

- C:\Program Files\Cognex\VisionPro Deep Learning\3.2\Service\VisionPro Deep Learning Service.exe

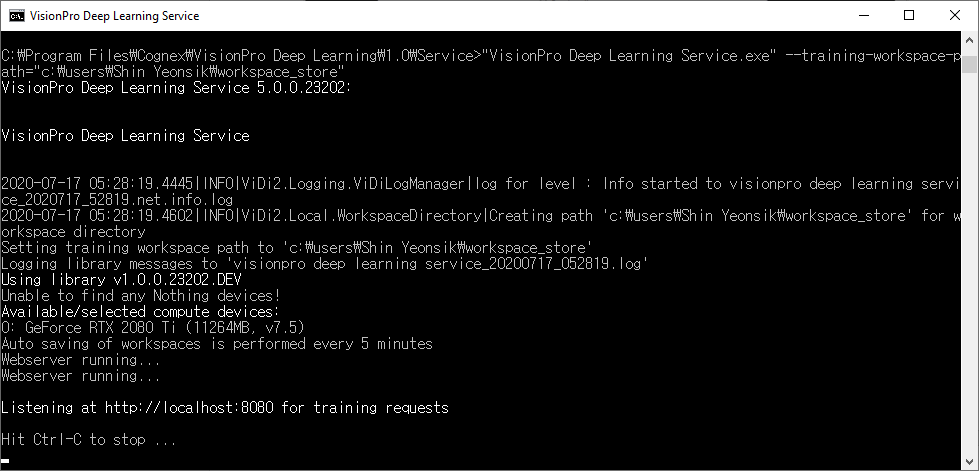

You issue the commands by first specifying the name of the application, followed by the command line arguments. For example, to change the default training workspace path, open a Windows Command Prompt, change to the directory that contains the application, and issue the following command:

C:\Program Files\Cognex\VisionPro Deep Learning\3.2\Service>"VisionPro Deep Learning Service.exe" --training-workspace-path=c:\users\username\workspace_store

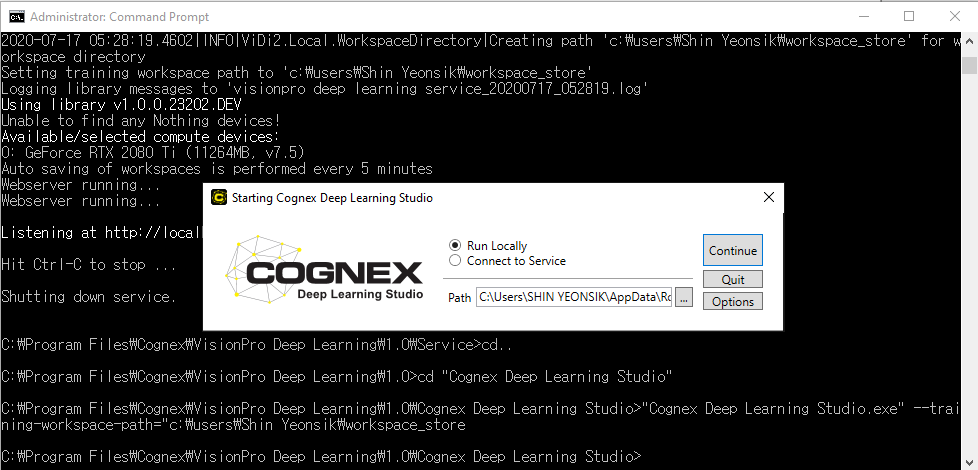

When you issue a command to the Deep Learning GUI, the GUI launcher screen appears, and you do not receive an acknowledgment of the command.
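If the service is started from a script rather than typed at the prompt, the same command can be assembled programmatically. The following Python sketch is one way to do that. The helper names `build_service_command` and `launch_service` are hypothetical, and the install path is the default location listed above.

```python
import subprocess
from pathlib import PureWindowsPath
from typing import List

# Default install location from this section; adjust the version folder as needed.
SERVICE_DIR = PureWindowsPath(r"C:\Program Files\Cognex\VisionPro Deep Learning\3.2\Service")
SERVICE_EXE = "VisionPro Deep Learning Service.exe"


def build_service_command(training_workspace_path: str) -> List[str]:
    """Return the argument list: application first, then its command line arguments."""
    return [
        str(SERVICE_DIR / SERVICE_EXE),
        f"--training-workspace-path={training_workspace_path}",
    ]


def launch_service(training_workspace_path: str) -> subprocess.Popen:
    """Start the service without waiting for it to exit (hypothetical helper)."""
    # Passing a list lets subprocess handle the spaces in the executable path.
    return subprocess.Popen(
        build_service_command(training_workspace_path),
        cwd=str(SERVICE_DIR),
    )
```

Calling `launch_service(r"c:\users\username\workspace_store")` on a machine with the software installed corresponds to the command shown above. Building the argument list separately makes it possible to inspect or log the command before running it.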

Workspace and Application Command Line Initialization

There are several command line arguments that can be used with the VisionPro Deep Learning GUI on startup for workspace and application initialization.

The commands are issued from a Windows Command Prompt. For Cognex Deep Learning Studio.exe (IDE application), navigate to this directory:

- C:\Program Files\Cognex\VisionPro Deep Learning\3.2\Cognex Deep Learning Studio

For VisionPro Deep Learning Service.exe (if using the Deep Learning Client/Server Functionality), navigate to this directory:

- C:\Program Files\Cognex\VisionPro Deep Learning\3.2\Service

You issue the commands by first specifying the name of the application followed by the command line arguments.

The following command line arguments can be issued to define the path of a workspace, and additional application related commands:

| Command | Description |
|---|---|
| --workspace-path=file path | Specifies the directory location where the workspace will be saved. |
| --auto-save-interval=duration in minutes | Specifies the amount of time between auto-saves of workspaces. The default is 5 minutes. Set to -1 to never auto-save. Note: The auto-save feature does not apply to runtime workspaces. Therefore, if the --runtime-only command is activated while the --auto-save-interval command has been set, the auto-save feature will be ignored. |
| --activate-debug-logs=[0 or 1] | Activates logging for debugging purposes. |
| --help | Used to return a list of command line options. |
| --version | Requests version information. |
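The arguments in the table above can be combined on a single command line. As a minimal sketch, the following Python helper assembles them into a list that can be appended to the application name. The function name `workspace_args` and its parameter names are hypothetical, and rejecting intervals below -1 is an assumption of this sketch, not documented behavior.

```python
from typing import List, Optional


def workspace_args(
    workspace_path: Optional[str] = None,
    auto_save_minutes: Optional[int] = None,
    debug_logs: Optional[bool] = None,
) -> List[str]:
    """Build workspace and application startup arguments; omitted options keep their defaults."""
    args: List[str] = []
    if workspace_path is not None:
        args.append(f"--workspace-path={workspace_path}")
    if auto_save_minutes is not None:
        # -1 disables auto-save; values below -1 are treated as invalid (assumption).
        if auto_save_minutes < -1:
            raise ValueError("auto_save_minutes must be -1 or a duration in minutes")
        args.append(f"--auto-save-interval={auto_save_minutes}")
    if debug_logs is not None:
        args.append(f"--activate-debug-logs={1 if debug_logs else 0}")
    return args


if __name__ == "__main__":
    # Example: save to a custom directory, auto-save every 10 minutes, enable debug logs.
    print(workspace_args(r"C:\workspaces", 10, True))
```

Because the auto-save feature does not apply to runtime workspaces, passing an interval has no effect when the application runs in runtime-only mode.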

GPU Mode Command Line Initialization

There are several command line arguments that can be used with the VisionPro Deep Learning GUI on startup for library initialization.

The commands are issued from a Windows Command Prompt. For Cognex Deep Learning Studio.exe (IDE application), navigate to this directory:

- C:\Program Files\Cognex\VisionPro Deep Learning\3.2\Cognex Deep Learning Studio

For VisionPro Deep Learning Service.exe (if using the Deep Learning Client/Server Functionality), navigate to this directory:

- C:\Program Files\Cognex\VisionPro Deep Learning\3.2\Service

You issue the commands by first specifying the name of the application followed by the command line arguments.

The following command line arguments can be used to control the GPU Mode, which GPU device to use and the allocation of GPU memory:

| Command | Description |
|---|---|
| --gpu-mode=NoSupport or SingleDevicePerTool | Specifies the GPU mode to be used by the application. Note: See Configure Multiple GPUs for more information about the GPU modes. |
| --gpu-devices=comma separated indexed list of GPUs | Specifies the GPUs that will be used on initialization, via an indexed list. For example: --gpu-devices=0,1 |
| --optimized-gpu-memory=memory size, in MB | Specifies the size of the pre-allocated optimized memory buffer. This setting is activated by default, with the default size of 2GB. To deactivate the feature, first issue the --optimized-gpu-memory-override=1 command, and then --optimized-gpu-memory=0 command. To use a memory buffer size other than the default setting, first issue the --optimized-gpu-memory-override=1 command, and then the --optimized-gpu-memory=<memory size in MB> command. Note: The GPU Memory Optimization setting is enabled by default. For more information about the functionality, see the GPU Memory Optimization. |
| --optimized-gpu-memory-override=[0 or 1] | If using the --optimized-gpu-memory setting, set to 1. If set to 0, the default amount of memory will be allocated. |
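The ordering rule for the two memory arguments is easy to get wrong, so a small helper can enforce it. The following Python sketch builds the GPU arguments and always emits the override before the memory size, as the table above describes. The function name `gpu_args` and its parameters are hypothetical, and rejecting negative sizes is an assumption of this sketch.

```python
from typing import List, Optional, Sequence

# The two GPU modes listed in the table above.
GPU_MODES = ("NoSupport", "SingleDevicePerTool")


def gpu_args(
    mode: Optional[str] = None,
    devices: Optional[Sequence[int]] = None,
    optimized_memory_mb: Optional[int] = None,
) -> List[str]:
    """Build GPU startup arguments; omitted options keep their defaults."""
    args: List[str] = []
    if mode is not None:
        if mode not in GPU_MODES:
            raise ValueError(f"mode must be one of {GPU_MODES}")
        args.append(f"--gpu-mode={mode}")
    if devices:
        # Indexed, comma separated list, for example 0,1.
        args.append("--gpu-devices=" + ",".join(str(i) for i in devices))
    if optimized_memory_mb is not None:
        if optimized_memory_mb < 0:
            raise ValueError("optimized_memory_mb must be 0 (deactivate) or a size in MB")
        # The override must be set to 1 before a non-default size (or 0) is applied.
        args.append("--optimized-gpu-memory-override=1")
        args.append(f"--optimized-gpu-memory={optimized_memory_mb}")
    return args


if __name__ == "__main__":
    # Example: two GPUs, one device per tool, 4096 MB optimized buffer.
    print(gpu_args("SingleDevicePerTool", [0, 1], 4096))
```

Passing `optimized_memory_mb=0` produces the argument pair that deactivates the optimized memory buffer, while leaving it unset keeps the default allocation.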