Blue Locate

About Blue Locate

The Blue Locate tool is used to identify and locate specific features or groups of features in an image. The output of the tool can be used to provide positional data for other downstream Deep Learning tools. When using the tool, you provide a Training Image Set, and then identify the features within the images.


You can also create two different types of models using the tool. The Layout Model checks for the presence of features and verifies the correct number of instances of one or more features in a region. The Node Model defines the spatial relationship among a group of features.

Blue Locate uses the Focused neural network architecture, so it shares all the properties of that architecture with the other Focused tools, such as Red Analyze Focused Supervised and Green Classify Focused.

| Property | Blue Locate |
|---|---|
| Image Dataset Composition | Training Set, Test Set |
| Monitoring Validation Loss | X |
| Loss Inspector | Not supported |

Training Workflow for Blue Locate: Generating a Pose

The Blue Locate tool is used to identify trained features in images. The tool can be used simply to identify the features, or it can be used to generate a pose for downstream tools. See VisionPro Deep Learning Tool Chains for more details on downstream tools. When a tool is in Blue Locate mode, the training workflow for generating a pose is:

- Launch VisionPro Deep Learning.

- Create a new workspace or import an existing one into VisionPro Deep Learning.

- Collect images and load them into VisionPro Deep Learning.

-

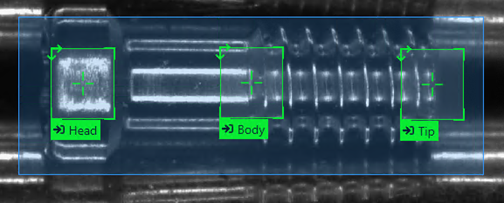

Define ROI (Region of Interest) to construct Views. If necessary, adjust the ROI of the tool.

- Configure Features parameters and Sampling parameters before labeling.

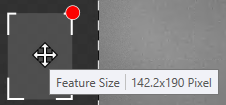

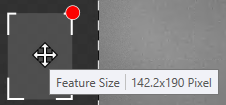

In the bottom-left corner of the display, a circle graphic illustrates the current Feature Size parameter setting.

You can move this circle graphic into the ROI and place it over the largest feature. Then use its drag handle to adjust the Feature Size until the circle approximately covers the feature.

Note: For more information, refer to the Configure Feature Parameters and Sampling Parameters topic.

-

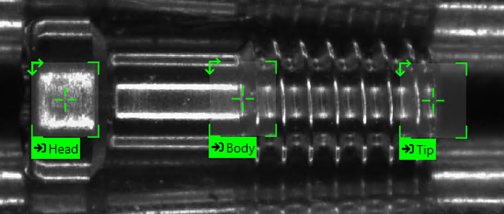

Within the ROI, while hovering over the image, the mouse-cursor will have a cross-hairs icon which is used to place the feature label of the Blue tool. Click on a feature to label it.

Note: For more information, refer to the Create Feature Label (Feature Labeling) topic.

- The feature label has a default identifier of 0. Enter a meaningful identifier (characters A-Z and 0-9, up to 140 characters) to identify the feature. You can use the same identifier for identical features.

If there is more than one feature that you want to find in the image, identify and label each feature.
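The naming rule for feature labels can be expressed as a small check. This is a minimal sketch. `is_valid_feature_label` is a hypothetical helper and is not part of VisionPro Deep Learning. It assumes the rule is read literally: only uppercase A-Z and digits 0-9, at least one character and at most 140.

```python
import re

# Hypothetical helper, not a VisionPro Deep Learning API.
# Assumption: identifiers may contain only A-Z and 0-9 and must be
# 1 to 140 characters long; lowercase letters, spaces, and symbols fail.
_LABEL_PATTERN = re.compile(r"[A-Z0-9]{1,140}")


def is_valid_feature_label(label: str) -> bool:
    """Return True if `label` matches the assumed identifier rule."""
    # fullmatch requires the entire string to match the pattern.
    return _LABEL_PATTERN.fullmatch(label) is not None


if __name__ == "__main__":
    for candidate in ["0", "SCREW", "HOLE2", "screw", "A" * 141]:
        print(candidate[:12], is_valid_feature_label(candidate))
```

Identical features, such as several screws of the same type, would all carry the same valid identifier (for example `SCREW`).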

-

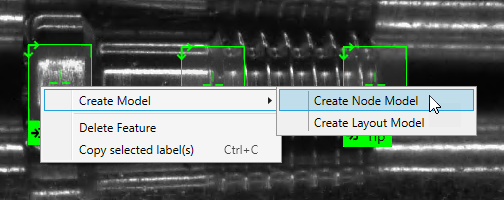

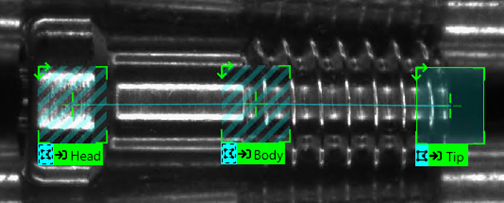

Create a model out of the labeled features. When used to generate a pose, you create a Node Model, which is used to output a transform that can be applied to any Views that are referencing that node model. A node model can be created of a single feature or a combination of multiple features.

Note: For more information about node models, refer to the Blue Locate Node Model topic.

- Select the labeled features that will make up the nodes of the model. To create a node model of multiple features, hold down the Shift key while selecting the labeled features, or hold down the Shift key and drag the mouse over the desired features.

- After selecting the labeled features, right-click the View, click Create Model, and select Create Node Model.

-

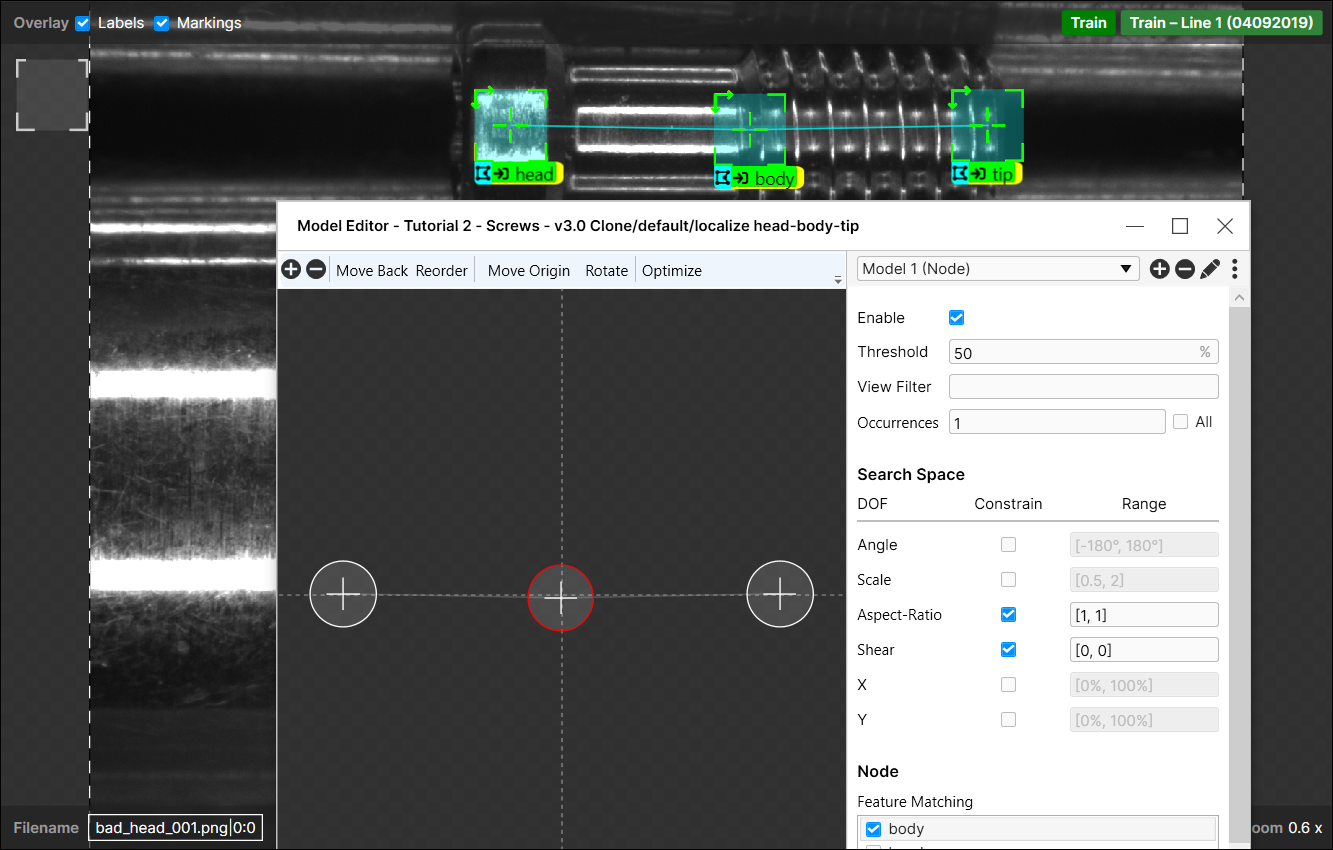

This will automatically use the selected features and area as the nodes for the model, based on the input image, and open the Model Editor dialog.

Note: See the Node Model Editor for more information about configuring the parameters of the node model.

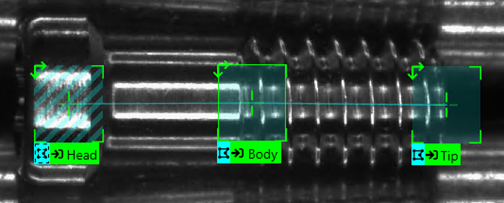

- With the node model created, you can now label the rest of the images/views based on the node model.

- The node model is based on the feature you labeled last before generating the node model. Place that label on the feature, and the tool automatically generates the node model.

- The other nodes are marked as potential parts of the node model. Select each feature to label each instance of the node.

Note: For more information about the color codes of the labels, see the Review Markings topic.

- Label the rest of the images/views based on the previous two steps.

- Press the Process icon of the tool to apply the model to all of the images.

- Once you have applied the node model to several of the images, press the Brain icon to train the tool.

Tip: Consider using the bootstrap method of labeling your images. "Bootstrap" labeling means starting by labeling only a small sample of your images, training the tool, and then reviewing the results: accept views with correct markings to convert them into labels, and remove incorrect markings and replace them with correct labels. You can speed up this process further by dividing images into multiple views and labeling only a single view. You can also temporarily reduce the Epoch Count parameter to shorten training time.

- After training, review the results by going through all of the images and determining whether the tool correctly marked the features and generated the appropriate model in each image.

-

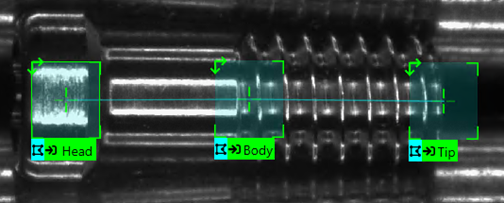

Go through all of the images and determine whether or not the tool correctly marked the features in the image.

- If the tool has correctly marked the features and the model, right-click the image and select Accept View.

- If the tool incorrectly marked the features or model, failed to identify a feature that is present, or generated mismatches, do the following:

Note: If the tool has placed one or more mismatched features (indicated by an orange outline), refer to the Mismatches topic.

- Right-click the image and select Accept View.

- Right-click the image again and select Clear Markings and Labels.

- Manually re-label the features in the image, following the labeling and node model steps above.

- If the tool correctly marked the features and the model in every image, you are ready to use the tool. If you had to clear markings and re-label any images, retrain the tool and repeat the previous two steps.

Training Workflow for Blue Locate: Identifying Features

The Blue Locate tool is used to identify trained features in images. The tool can be used simply to identify the features, or it can be used to generate a pose for downstream tools. See VisionPro Deep Learning Tool Chains for more details on downstream tools. When a tool is in Blue Locate mode, the training workflow for identifying a feature is:

- Launch VisionPro Deep Learning.

- Create a new workspace or import an existing one into VisionPro Deep Learning.

- Collect images and load them into VisionPro Deep Learning.

- Define ROI (Region of Interest) to construct Views. If necessary, adjust the ROI of the tool.

- Configure Features parameters and Sampling parameters before labeling.

In the bottom-left corner of the display, a circle graphic illustrates the current Feature Size parameter setting.

You can move this circle graphic into the ROI and place it over the largest feature. Then use its drag handle to adjust the Feature Size until the circle approximately covers the feature.

Note: For more information, refer to the Configure Feature Parameters and Sampling Parameters topic.

- Within the ROI, the mouse cursor becomes a crosshair when you hover over the image. Use it to place the feature labels of the Blue tool, and click a feature to label it.

Note: For more information, refer to the Create Feature Label (Feature Labeling) topic.

- The feature label has a default identifier of 0. Enter a meaningful identifier (characters A-Z and 0-9, up to 140 characters) to identify the feature. You can use the same identifier for identical features.

If there is more than one feature that you want to find in the image, identify and label each feature.

- Go through each image in your image database and label every instance of the feature(s).

Tip: To ensure the best results, be careful and thorough: identify and label every instance of the feature, and place each label accurately and precisely. You are establishing the ground truth for the tool, and the tool's accuracy directly reflects how accurately you label the features. See Create Feature Label (Feature Labeling) for the details of labeling.

- Split all of the images into training images and test images. Use image sets to divide them properly into the training and test groups, then add the training images to the training set.

- Select the images in the View Browser, right-click, and click Add views to training set in the pop-up menu. To select multiple images in the View Browser, hold Shift and click with the left mouse button.

- Alternatively, use Display Filters to show only the images you want for training, and add them to the training set by clicking Actions for ... views → Add views to training set.

- Before training, set the parameters in Tool Parameters. You can configure the Training, Sampling, Features (if necessary), and Perturbation parameters, or use their default values. See Configure Feature Parameters and Sampling Parameters and Configure Tool Parameters for the details of the supported parameters.

- Ensure that the Feature Size parameter in the Sampling parameters has been set. The Feature Size parameter gives the network a hint about the size of the features you want to identify. If the Feature Size setting clearly differs from the size of the features in your application, the tool may fail to identify the features in your image.

- If you want more granular control over training or processing, turn on Expert Mode in the Help menu to enable additional parameters in Tool Parameters.

- Once you have added the desired images to the training set, press the Brain icon to train the tool.

- If you press the Stop icon during training, training stops, but you lose the tool trained so far.

Tip: Consider using the bootstrap method of labeling your images. "Bootstrap" labeling means starting by labeling only a small sample of your images, training the tool, and then reviewing the results: accept views with correct markings to convert them into labels, and remove incorrect markings and replace them with correct labels. You can speed up this process further by dividing images into multiple views and labeling only a single view. You can also temporarily reduce the Epoch Count parameter to shorten training time.

- After training, review the results. Open the Database Overview panel and review the Confusion Matrix and the Precision, Recall, and F-Score for each feature and each model. See Interpret Results for the details of interpreting results.
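Precision, Recall, and F-Score relate the tool's markings to your labels. The sketch below uses the standard definitions. It assumes per-feature counts of true positives (labeled features the tool found), false positives (markings with no matching label) and false negatives (labeled features the tool missed) are already available. It also assumes F-Score is the usual F1 score, the harmonic mean of precision and recall. How VisionPro Deep Learning matches markings to labels is not shown here, and `MatchCounts` and the helper functions are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class MatchCounts:
    """Hypothetical per-feature counts from comparing markings with labels."""
    true_positives: int   # labeled features the tool marked correctly
    false_positives: int  # markings that do not correspond to any label
    false_negatives: int  # labeled features the tool failed to mark


def precision(c: MatchCounts) -> float:
    """Fraction of the tool's markings that are correct."""
    marked = c.true_positives + c.false_positives
    return c.true_positives / marked if marked else 0.0


def recall(c: MatchCounts) -> float:
    """Fraction of the labeled features that the tool found."""
    labeled = c.true_positives + c.false_negatives
    return c.true_positives / labeled if labeled else 0.0


def f_score(c: MatchCounts) -> float:
    """Assumed F1 score: harmonic mean of precision and recall."""
    p, r = precision(c), recall(c)
    return 2 * p * r / (p + r) if (p + r) else 0.0
```

For example, 9 correct markings, 1 spurious marking and 3 missed features give a precision of 0.9 and a recall of 0.75. A low recall points to missed features, while a low precision points to spurious markings.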

- After reviewing the results, go through all of the images and check whether the tool correctly or incorrectly marked the features and models in each image.

- If the tool has correctly marked the features, right-click the image and select Accept View.

- If the tool incorrectly marked the features, failed to identify a feature that is present, or placed a mismatch label on a feature, do the following:

Note: If the tool has placed one or more mismatched features (indicated by an orange outline), refer to the Mismatches topic.

- Right-click the image and select Accept View.

- Right-click the image again and select Clear Markings and Labels.

- Manually re-label the features in the image.

- If the tool correctly marked the features in every image, you are ready to use the tool. If you had to clear markings and re-label any images, retrain the tool and repeat the training and review steps above.

The details of each step are explained in each subsection of Training Blue Locate.