Red Analyze High Detail

Overview of Red Analyze Architectures

The Red Analyze tool is used to inspect images, find defect pixels, and distinguish defective images from normal ones. The Red Analyze tool has two types of architectures: Focused (Focused Supervised and Focused Unsupervised) and High Detail.

- Red Analyze Focused Supervised is used to segment specific regions such as defects or other areas of interest. Be it blowholes in cast metal or bruised vegetables on a conveyor, the Red Analyze tool in Supervised mode can identify all of these and many more problems simply by learning the varying appearance of the defect or target region. To train the Red Analyze tool in Supervised mode, all you need to provide are images of the type of defective regions you are looking for.

- Red Analyze Focused Unsupervised is used to detect anomalies and aesthetic defects. Be it scratches on a decorated surface, incomplete or improper assemblies, or even weaving problems in textiles, the Red Analyze tool can identify all of these and many more problems simply by learning the normal appearance of an object, including its significant but tolerable variations. To train the Red Analyze tool in Unsupervised mode, all you need to provide are images of good objects.

- Red Analyze High Detail is an enhanced version of Red Analyze Focused Supervised in terms of segmentation performance, at the cost of slower training and processing. Its higher performance compared to Red Analyze Focused Supervised comes from the distinct training architecture it uses for segmentation tasks.

About Red Analyze High Detail

With Red Analyze High Detail, the Red Analyze tool is also taught the appearance of defects, but it uses a different architecture from Focused Supervised mode. Like Green Classify High Detail mode, Red Analyze High Detail mode samples from the entire region of each view in both training and processing, which means that it does not use a feature sampler.

As with Green Classify High Detail, this difference gives you a trade-off between result quality and speed. If you need better, more detailed results and can tolerate slower training and processing, Red Analyze High Detail can be your option. It forms an explicit model of the different types of defects, so it still needs both good and bad samples to train.

Overall, Red Analyze High Detail performs the same task as Red Analyze Focused Supervised, but differs in how it delivers the results. Like Red Analyze Focused Supervised, it concentrates on teaching the network what defects look like.

Architectures: Red Analyze High Detail vs Red Analyze Focused Supervised

High Detail mode uses a different architecture from Focused mode. Because it samples from the entire view, it has no Sampling Parameters in the Tool Parameters pane. For the same reason, High Detail mode takes more time for training and processing than Focused mode, but it can give more accurate and detailed results at the pixel level. The way you label images and create a model in High Detail mode is basically the same as in Focused mode, but there are some differences in the tool parameters. As with Red Analyze Focused Supervised, Red Analyze High Detail supports only binary classes (Good/Bad); multiple classes are not supported.

When you label an image as Good in Red Analyze High Detail (in other words, an image that does not contain any defect regions), the tool will also use that image for training once it is added to the training set. In particular, the tool will attempt to train the network so that images labeled Good do not generate any defect responses. Adding labeled, defect-free Good images to your Training Image Set can help you validate the performance of the tool in classifying good and bad images.

| | Focused - Supervised | High Detail (Supervised) |
|---|---|---|
| Training/Processing Time | Short | Long |
| Results | Accurate | More Accurate |
| Image Dataset Composition | Training Set, Test Set | Training Set, Validation Set, Test Set |
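The Validation Set listed for High Detail is taken out of the training set according to a ratio. The following is a minimal sketch of that idea in plain Python, not the VisionPro Deep Learning API: the function name `split_training_views`, the view identifiers, and the shuffling strategy are all assumptions made for illustration, and the tool's actual selection logic may differ.

```python
import random


def split_training_views(view_ids, validation_ratio, seed=0):
    """Split view IDs into (training, validation) lists.

    Hypothetical helper: the real tool may select validation views differently.
    """
    if not 0.0 <= validation_ratio < 1.0:
        raise ValueError("validation_ratio must be in [0, 1)")
    shuffled = list(view_ids)
    # A fixed seed keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * validation_ratio)
    return shuffled[n_val:], shuffled[:n_val]


# Illustrative view names; 20% of the training set is held out for validation.
all_views = [f"view_{i}" for i in range(20)]
train, val = split_training_views(all_views, validation_ratio=0.2)
```

The held-out views are used to compute validation loss during training, so a larger ratio leaves fewer views for fitting the network itself.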

Supported Features vs Architectures

| Features \ Architectures | Red Analyze Focused Supervised, Red Analyze Focused Unsupervised | Red Analyze High Detail |
|---|---|---|
| Loss Inspector | Not supported | Supported |
| Validation Set | Not used in training | Used in training |
| VisionPro Deep Learning Tool Parameters | Fewer parameters | More parameters for control* |

* More training and perturbation parameters for detailed training control and granular adjustment

Training Workflow for Red Analyze High Detail

When a Red Analyze tool is in Red Analyze High Detail mode, the training workflow of the tool is:

- Launch VisionPro Deep Learning.

- Create a new workspace or import an existing one into VisionPro Deep Learning.

- Collect images and load them into VisionPro Deep Learning.

- Define ROI (Region of Interest) to construct Views.

  - If a pose from a Blue Locate tool is used to transform the orientation of the View that is input to the Red Analyze tool, process the images (press the Scissors icon) before opening the Red Analyze tool. For more details, see ROI Options Following a Blue Locate Tool.

  - If necessary, adjust the Region of Interest (ROI). Within the Display Area, right-click and select Edit ROI from the menu.

  - After adjusting the ROI, press the Apply button and the adjusted ROI will be applied to all of the images.

  - Press the Close button on the toolbar to continue.

- If there is extraneous information in the image, add appropriate masks to exclude those areas of the image. Within the Display Area, right-click and select Edit Mask from the menu.

From the Mask toolbar, select and edit the appropriate masks.

After adding the necessary mask(s), press the Apply button and the mask will be applied to the current image.

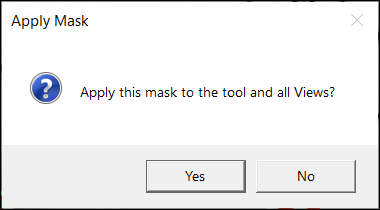

If you click Apply All and click Yes button on the ensuing Apply Mask dialog after adding the necessary masks, the same mask will be applied on all the images. If you click No on the dialog, the mask will not be applied and you go back to the Edit Mask window.

- Press the Close button on the toolbar to continue.

- Go through all of the images and label the defects in the images. Make sure that all of the images have been labeled. See Create Label (Labeling) for the details of labeling.

- Split the images into training images and test images. Use image sets to divide them into a training group and a test group, then add images to the training set.

  - Select the images in the View Browser, and on the right-click pop-up menu click Add views to training set. To select multiple images in the View Browser, use Shift + Left Mouse Button.

  - Or, use Display Filters to show only the images you want for training, and add them to the training set by clicking Actions for ... views → Add views to training set.

- Prior to training, set the parameters in Tool Parameters. You can configure the Training and Perturbation parameters or just use their default values. See Configure Tool Parameters for the details of the supported parameters.

  - Red Analyze High Detail mode has no Sampling parameters because it does not use a feature-based sampler; it samples from the entire region of each view.

  - If you want more granular control over training or processing, turn on Expert Mode in the Help menu to enable additional parameters in Tool Parameters.

  - To change the proportion of the training set that is set aside as the validation set, change the Validation Set Ratio. See Prepare Validation Set for the details of training with validation.

- Train the tool by pressing the Brain icon.

  - You can check the training status by monitoring Validation Loss with the Loss Inspector. See Validation Set and Validation Loss for more details.

  - If you stop training partway by pressing the Stop icon, the tool as trained so far is saved. You can later load this tool and process images with it, but you cannot resume training from where it was stopped.

- After training, review the results. Open the Database Overview panel and review the Scores/ROC graph, the Confusion Matrix, and its F1 Score, switching between categories in the Count drop-down list. Review Precision, Recall, and F-Score (Region Area Metrics) to understand the results at the pixel level. See Interpret Results for the details of interpreting results.

- After reviewing the results, go through all of the images and check whether the tool marked the defects in each image correctly.

  - If the tool has correctly marked the defects, right-click the image and select Accept View.

  - If the tool has incorrectly marked the defects, or failed to identify a present defect:

    - Right-click the image and select Clear Markings and Labels.

    - Manually label the defective pixels.

- To tune the results at processing time, adjust the Processing parameters and re-process by clicking the Magnifier icon to get the changed results. For example, to change the decision boundary, which is determined by T1 and T2, adjust the Threshold parameter.

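- If the tool marked the defects correctly (scenario a. of the review step), you are ready to use the tool. If it marked them incorrectly or missed a defect (scenario b.), retrain the tool and repeat steps 8 to 11, from setting the tool parameters through reviewing the marked images.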
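The workflow above mentions a decision boundary determined by T1 and T2. The sketch below shows one way a two-threshold boundary can work. It is an assumption made for illustration: the three-way rule (below T1 is Good, above T2 is Bad, in between is undecided), the function name `classify_view`, and the example scores are not taken from the tool, so check Interpret Results for the actual behavior.

```python
def classify_view(score, t1, t2):
    """Map a defect score to a label using two thresholds (assumed rule).

    Assumption: scores below t1 are Good, scores above t2 are Bad,
    and anything in between is left undecided.
    """
    if t1 > t2:
        raise ValueError("t1 must not exceed t2")
    if score < t1:
        return "Good"
    if score > t2:
        return "Bad"
    return "Uncertain"


# Hypothetical scores for three views.
scores = [0.10, 0.45, 0.80]
labels_wide = [classify_view(s, t1=0.3, t2=0.6) for s in scores]
# Narrowing the gap between the thresholds resolves the middle view.
labels_narrow = [classify_view(s, t1=0.3, t2=0.4) for s in scores]
```

Under this assumed rule, moving the thresholds changes only how existing scores are interpreted, which is why re-processing is enough and retraining is not needed.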

The details of each step are explained in each subsection of Training Red Analyze High Detail.
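To make the pixel-level Precision, Recall, and F-Score from the review step more concrete, here is a minimal sketch that computes them from a labeled mask and a predicted mask. It uses the standard textbook definitions, which is an assumption: the source does not spell out how Region Area Metrics are computed. The function name `region_area_metrics` and the tiny example masks are hypothetical.

```python
def region_area_metrics(labeled, predicted):
    """Return (precision, recall, f_score) over defect pixels.

    Both arguments are same-shaped 2D lists of 0/1, where 1 marks a defect pixel.
    Standard definitions are assumed; the tool's own computation may differ.
    """
    tp = fp = fn = 0
    for lab_row, pred_row in zip(labeled, predicted):
        for lab, pred in zip(lab_row, pred_row):
            if pred and lab:
                tp += 1  # defect pixel found by the tool
            elif pred:
                fp += 1  # tool marked a pixel that was not labeled
            elif lab:
                fn += 1  # labeled defect pixel the tool missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f_score = 2 * precision * recall / total if total else 0.0
    return precision, recall, f_score


labeled_mask = [[0, 1, 1],
                [0, 1, 0]]
predicted_mask = [[0, 1, 0],
                  [1, 1, 0]]
precision, recall, f_score = region_area_metrics(labeled_mask, predicted_mask)
```

In this example the tool finds two of the three labeled defect pixels and marks one extra pixel, so precision and recall are both two thirds.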